Bagging in Machine Learning Explained

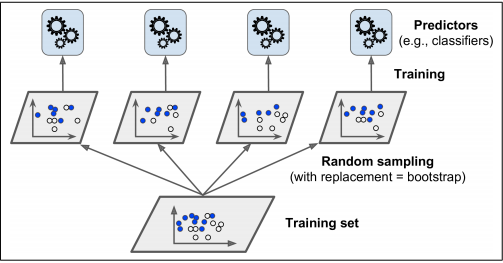

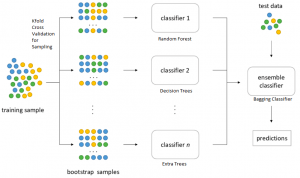

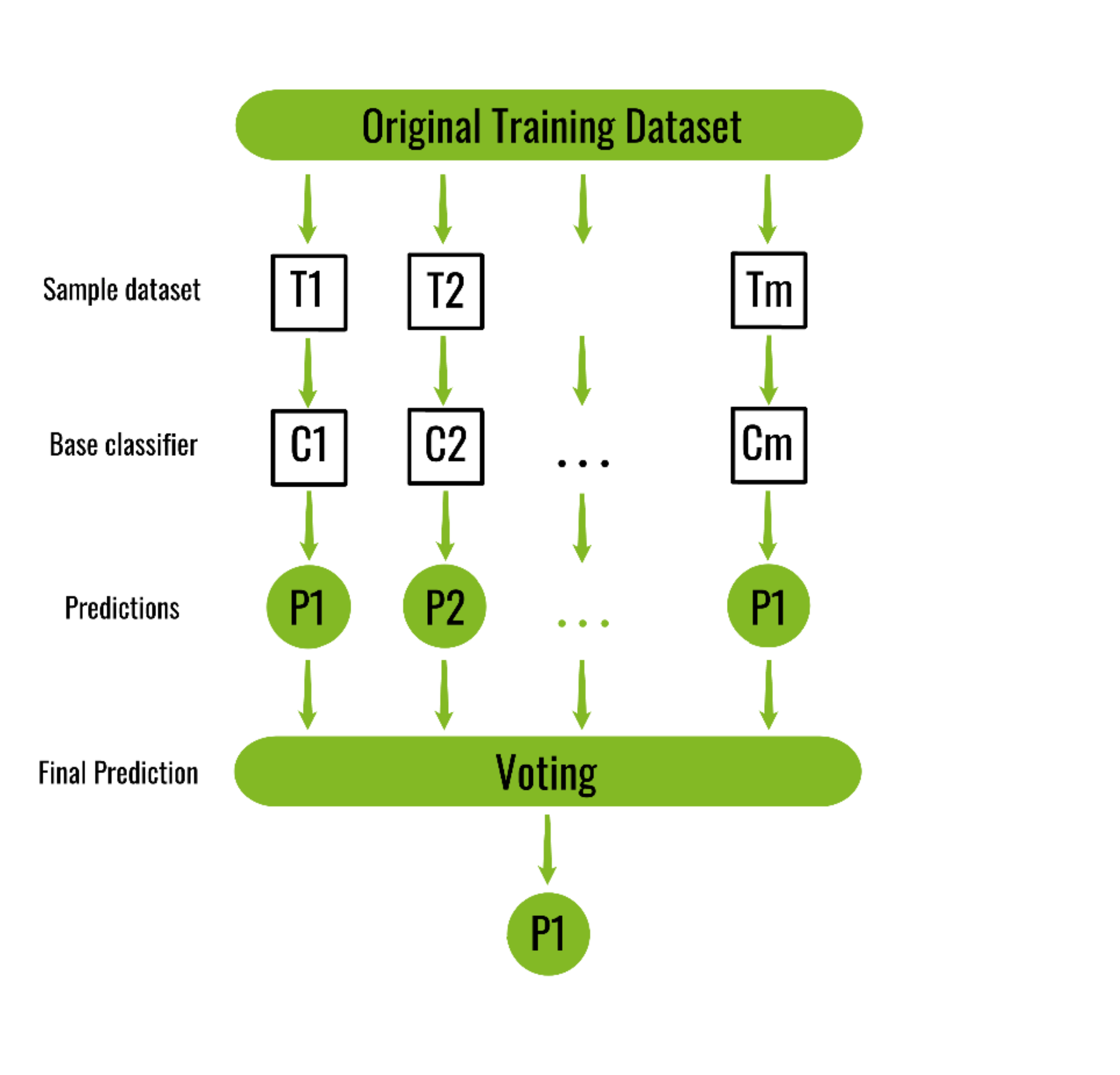

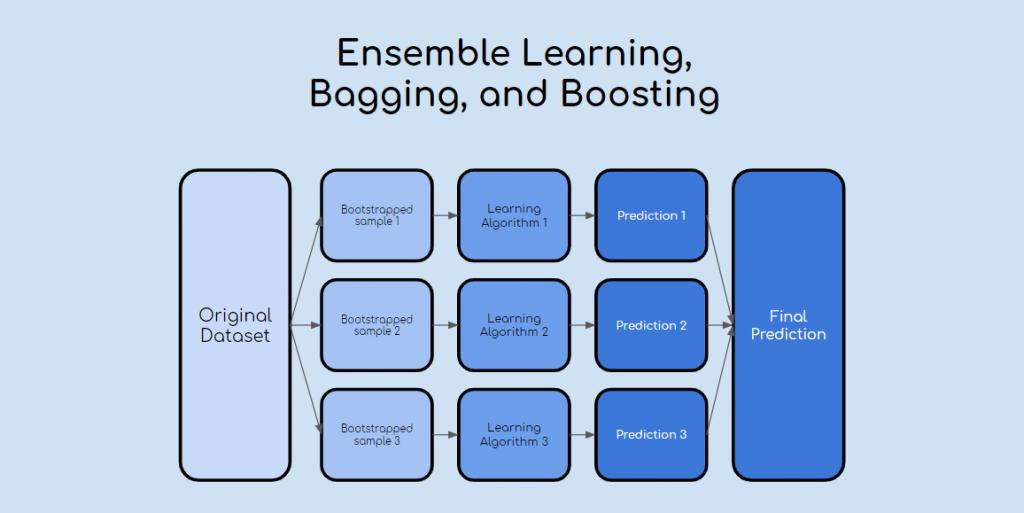

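Bagging consists of fitting several base models on different bootstrap samples and building an ensemble model that averages the results of these weak learners. The main steps involved in bagging are drawing bootstrap samples from the training data, fitting a base model on each sample, and aggregating the base models' predictions.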
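A minimal sketch of these steps in plain Python, assuming a one-dimensional regression problem. The base learner `fit_1nn` (1-nearest-neighbour regression, picked only because it is a high-variance model) and the helpers `bagging_fit` and `bagging_predict` are hypothetical names for illustration, not a library API.

```python
import random
from statistics import mean


def fit_1nn(xs, ys):
    """Hypothetical high-variance base model: 1-nearest-neighbour regression on 1-D inputs."""
    data = list(zip(xs, ys))

    def predict(x):
        # Return the target of the training point closest to x.
        return min(data, key=lambda p: abs(p[0] - x))[1]

    return predict


def bagging_fit(xs, ys, n_models=25, seed=0):
    """Fit n_models base models, each on its own bootstrap sample."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: n indices drawn uniformly with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_1nn([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def bagging_predict(models, x):
    # Regression: the ensemble prediction is the average of the base predictions.
    return mean(m(x) for m in models)


xs = [0, 1, 2, 3, 4, 5, 6, 7]
ys = [0.0, 1.2, 1.9, 3.1, 4.2, 4.8, 6.1, 7.0]
models = bagging_fit(xs, ys)
print(round(bagging_predict(models, 3.4), 2))
```

Each individual 1-NN model simply copies one training target, so its prediction jumps around from sample to sample; averaging 25 of them smooths that out.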

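Bagging can improve the accuracy of unstable models, that is, models whose predictions change substantially when the training data changes slightly, such as deep decision trees.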

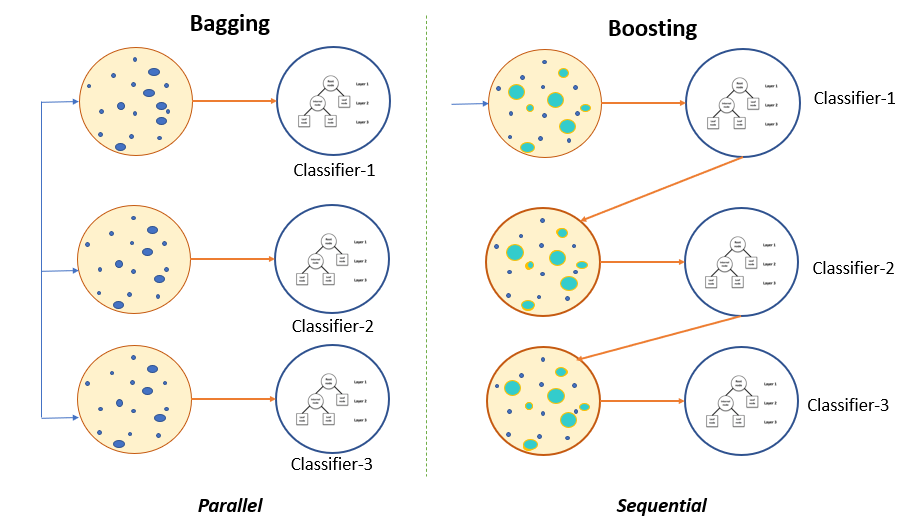

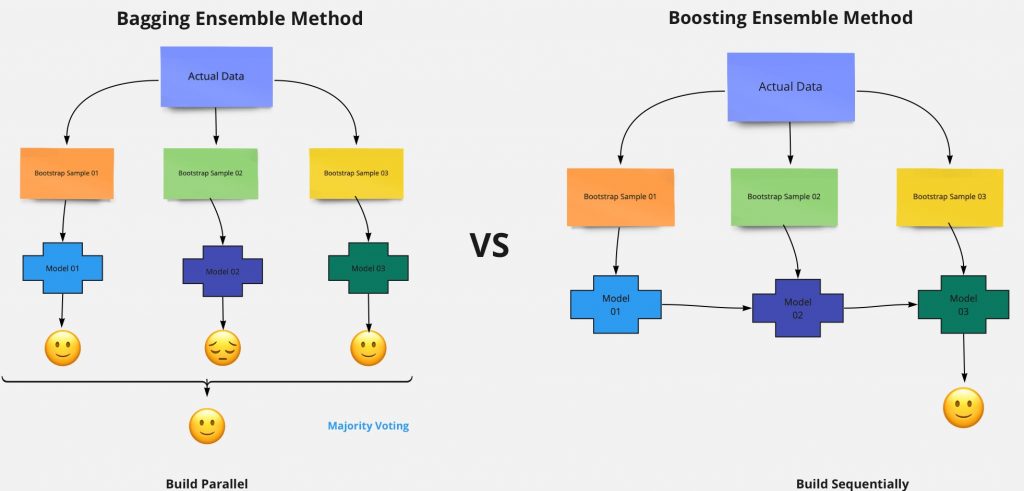

Bagging is one of the ensemble methods that improve a model's overall performance. Boosting and bagging are the two most widely used ensemble methods in machine learning.

Ad Accelerate your ML journey with AutoML data-centric AI fairness and data quality tools. ML Bagging classifier. Ad Find Machine Learning Use-Cases Tailored to What Youre Working On.

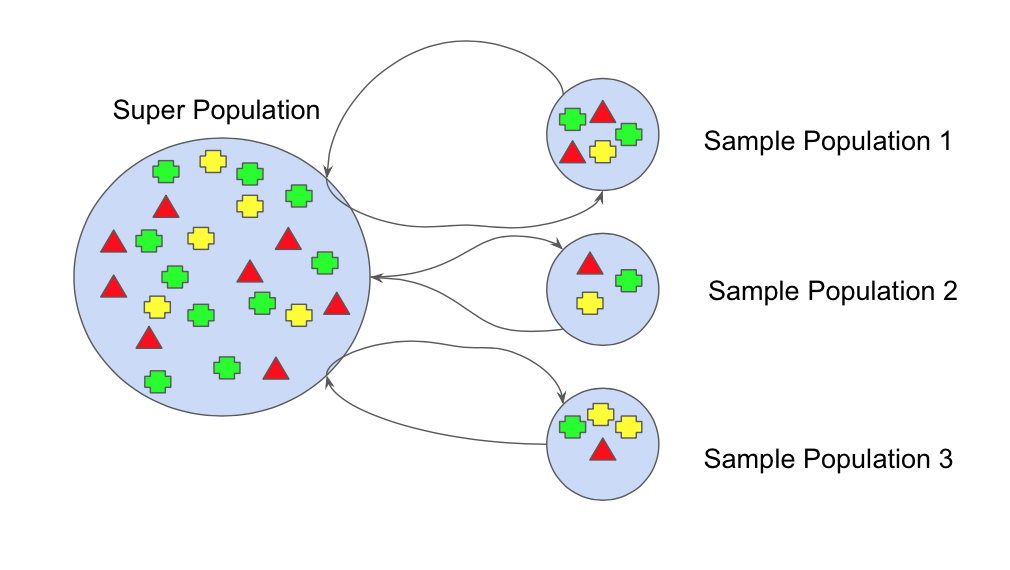

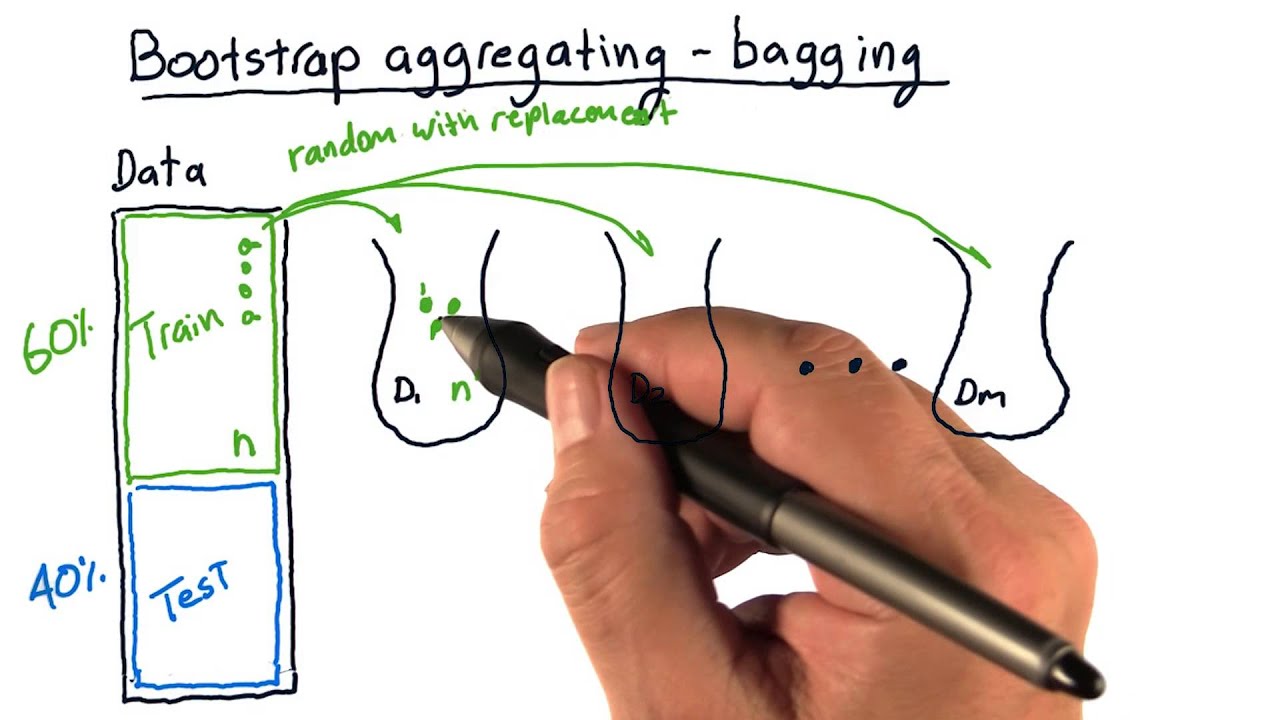

Bagging stands for bootstrap aggregating. It is the application of the bootstrap procedure to a high-variance machine learning algorithm, usually decision trees. Sampling is done with replacement on the original dataset, and the resulting samples form new datasets.
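A short sketch of how the new datasets can be formed, assuming the data fits in a Python list. `bootstrap_datasets` is a hypothetical helper; it relies only on the standard library's `random.Random.choices`, which samples with replacement.

```python
import random


def bootstrap_datasets(data, n_datasets, seed=None):
    """Form n_datasets new datasets, each the same size as `data`,
    by drawing observations uniformly at random with replacement."""
    rng = random.Random(seed)
    return [rng.choices(data, k=len(data)) for _ in range(n_datasets)]


original = ["a", "b", "c", "d", "e"]
for sample in bootstrap_datasets(original, n_datasets=3, seed=42):
    print(sample)
```

Because draws are made with replacement, a typical bootstrap dataset repeats some observations and leaves others out, which is what makes the base models differ from one another.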

Bootstrap aggregation (bagging) is an ensemble method that attempts to reduce overfitting in classification and regression problems. Bagging and boosting are both ensemble techniques: bagging mainly reduces a model's variance, while boosting mainly reduces its bias.

Ensemble learning is a way to reduce overfitting and underfitting in machine learning models, and ensemble methods can be mainly categorized into bagging and boosting.

Both techniques use random sampling to generate multiple training sets. Bagging is a parallel ensemble learning method, whereas boosting is a sequential ensemble learning method.
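The parallel versus sequential distinction can be sketched in plain Python, assuming 1-D regression data. `fit_stump` is a hypothetical regression-stump base learner, and the boosting loop is a simplified least-squares residual-fitting scheme (in the spirit of gradient boosting), not AdaBoost or any library's implementation; `lr` is an assumed shrinkage factor.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def fit_stump(xs, ys):
    """Hypothetical base learner: a 1-D regression stump that picks the
    split threshold with the lowest squared error."""
    best = None
    for t in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        lm = mean(left)
        rm = mean(right) if right else lm
        err = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if best is None or err < best[0]:
            best = (err, t, lm, rm)
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm


def fit_on_bootstrap(xs, ys, seed):
    # Each model draws its own bootstrap sample from its own seeded RNG,
    # so the result does not depend on which thread runs first.
    rng = random.Random(seed)
    idx = [rng.randrange(len(xs)) for _ in xs]
    return fit_stump([xs[i] for i in idx], [ys[i] for i in idx])


def bagging_parallel(xs, ys, n_models=10):
    # Bagging: no model needs another model's output, so all fits are submitted at once.
    with ThreadPoolExecutor() as pool:
        futures = [pool.submit(fit_on_bootstrap, xs, ys, s) for s in range(n_models)]
        return [f.result() for f in futures]


def boosting_sequential(xs, ys, n_models=10, lr=0.5):
    # Boosting (simplified residual fitting): model t is fit to the residuals
    # left by models 1..t-1, so this loop cannot run in parallel.
    models, residuals = [], list(ys)
    for _ in range(n_models):
        m = fit_stump(xs, residuals)
        models.append(m)
        residuals = [r - lr * m(x) for x, r in zip(xs, residuals)]
    return models


def predict_bagged(models, x):
    return mean(m(x) for m in models)  # average of independently trained models


def predict_boosted(models, x, lr=0.5):
    return sum(lr * m(x) for m in models)  # sum of sequential corrections


xs = [0, 1, 2, 3, 4, 5, 6, 7]
ys = [0.1, 0.9, 2.2, 2.8, 4.1, 5.2, 5.8, 7.1]
bag = bagging_parallel(xs, ys)
boost = boosting_sequential(xs, ys)
print(predict_bagged(bag, 3.5), predict_boosted(boost, 3.5))
```

In CPython, threads mostly illustrate the independence of the bagged fits here; CPU-bound training would usually need a process pool to run truly in parallel.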

Given a training dataset $\mathcal{D} = \{(x_n, y_n)\}_{n=1}^{N}$ and a separate test set $\mathcal{T} = \{x_t\}_{t=1}^{T}$, we build and deploy a bagging model by drawing bootstrap samples from $\mathcal{D}$, fitting a base model on each sample, and aggregating the base models' predictions on $\mathcal{T}$.
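Written out with the symbols above, the procedure can be summarized as follows. The number of bootstrap samples $B$ and the fitted base models $\hat f_b$ are our own notation, not taken from the original; averaging is used for regression and majority voting for classification.

$$
\begin{aligned}
&\text{for } b = 1, \dots, B:\\
&\qquad \mathcal{D}_b \leftarrow N \text{ pairs drawn uniformly with replacement from } \mathcal{D}\\
&\qquad \hat f_b \leftarrow \text{base model fitted on } \mathcal{D}_b\\
&\text{regression: } \hat f(x_t) = \frac{1}{B} \sum_{b=1}^{B} \hat f_b(x_t), \qquad t = 1, \dots, T\\
&\text{classification: } \hat y_t = \arg\max_{k} \sum_{b=1}^{B} \mathbb{1}\left[\hat f_b(x_t) = k\right], \qquad t = 1, \dots, T
\end{aligned}
$$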

Bagging on decision trees works by creating bootstrap samples from the training dataset, building a tree on each bootstrap sample, and combining the trees' predictions. A bagging classifier is an ensemble meta-estimator that fits base classifiers, each on a random subset of the original dataset, and then aggregates their individual predictions (by voting or averaging) to form a final prediction.
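A minimal sketch of a bagging classifier with majority voting, assuming a single numeric feature. To keep it short, the base "tree" is a hypothetical one-split decision tree (a stump), `fit_stump_classifier`, rather than a full decision tree; `bagging_classifier` and `n_estimators` are illustrative names, not a library API.

```python
import random
from collections import Counter


def fit_stump_classifier(xs, ys):
    """Hypothetical base learner: a one-split decision tree (stump) on 1-D inputs.
    Tries every threshold and keeps the one with the fewest training errors."""
    best = None
    for t in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        l_lab = Counter(left).most_common(1)[0][0]
        r_lab = Counter(right).most_common(1)[0][0] if right else l_lab
        errors = sum(y != l_lab for y in left) + sum(y != r_lab for y in right)
        if best is None or errors < best[0]:
            best = (errors, t, l_lab, r_lab)
    _, t, l_lab, r_lab = best
    return lambda x: l_lab if x <= t else r_lab


def bagging_classifier(xs, ys, n_estimators=15, seed=0):
    rng = random.Random(seed)
    n = len(xs)
    trees = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample
        trees.append(fit_stump_classifier([xs[i] for i in idx], [ys[i] for i in idx]))

    def predict(x):
        votes = Counter(tree(x) for tree in trees)  # one vote per tree
        return votes.most_common(1)[0][0]  # majority vote

    return predict


xs = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
ys = ["no", "no", "no", "no", "yes", "no", "yes", "yes", "yes", "yes"]
clf = bagging_classifier(xs, ys)
print(clf(2), clf(9))
```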

Boosting should not be confused with bagging, which is the other main family of ensemble methods.

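Multiple subsets, each with the same number of tuples as the original dataset, are created by selecting observations with replacement. Bagging aims to improve accuracy and performance. In bagging, a random sample of the training data is selected with replacement, so an individual observation can be chosen more than once.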

In bagging, the weak learners are trained in parallel, with randomness introduced through the bootstrap sampling of the training data.

Repeatedly picking samples at random with replacement can improve the accuracy of an unstable machine learning model.

Bagging combines predictions of the same type, produced by base models of the same kind. The technique is useful for both regression and statistical classification. Bagging, also known as bootstrap aggregation, is an ensemble learning method commonly used to reduce variance within a noisy dataset.

Decision trees trained on bootstrap samples of the same data show a lot of similarity and correlation in their predictions.
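This correlation limits how much averaging can help. A standard result is that if $B$ identically distributed predictions each have variance $\sigma^2$ and pairwise correlation $\rho$, their average has variance $\rho\sigma^2 + (1-\rho)\sigma^2/B$. The sketch below evaluates that formula; `variance_of_average` is a hypothetical helper, and the chosen values of $\rho$ and $B$ are purely illustrative.

```python
def variance_of_average(sigma2, rho, n_models):
    """Variance of the mean of n_models identically distributed predictions,
    each with variance sigma2 and pairwise correlation rho."""
    return rho * sigma2 + (1 - rho) * sigma2 / n_models


for rho in (0.0, 0.5, 0.9):
    print(rho, [round(variance_of_average(1.0, rho, b), 3) for b in (1, 10, 100)])
```

With uncorrelated predictions ($\rho = 0$) the variance keeps shrinking as $1/B$, but with highly correlated trees it levels off near $\rho\sigma^2$ no matter how many models are added.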

Let's assume we have a sample dataset of 1000 observations.
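For a dataset of this size, a quick calculation shows how much of it a single bootstrap sample actually covers. An observation is missed by all $N$ draws with probability $(1 - 1/N)^N$, which approaches $1/e$; so each bootstrap sample of 1000 draws contains roughly 632 distinct observations and leaves about 368 out. The variable names below are illustrative.

```python
import math
import random

N = 1000  # dataset size from the example above

# Probability that a given observation is never drawn in N draws with replacement.
p_out = (1 - 1 / N) ** N
expected_in_bag = N * (1 - p_out)
print(f"P(left out) = {p_out:.4f}, expected distinct in-bag = {expected_in_bag:.1f}, "
      f"limit 1 - 1/e = {1 - 1 / math.e:.4f}")

# Empirical check with one bootstrap sample of row indices.
rng = random.Random(0)
sample = [rng.randrange(N) for _ in range(N)]
n_unique = len(set(sample))
print(f"distinct observations in one bootstrap sample: {n_unique}, left out: {N - n_unique}")
```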
